Competition —

AIs now handle the once computationally impossible task of translating protein sequence to structure.

John Timmer


Thanks to the development of DNA-sequencing technology, it has become trivial to obtain the sequence of bases that encode a protein and translate that to the sequence of amino acids that make up the protein. But from there, we often end up stuck. The actual function of the protein is only indirectly specified by its sequence. Instead, the sequence dictates how the amino acid chain folds and flexes in three-dimensional space, forming a specific structure. That structure is typically what dictates the function of the protein, but obtaining it can require years of lab work.
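To make the "trivial" step concrete, here is a minimal Python sketch of turning a DNA coding sequence into amino acids. It assumes the standard genetic code, uppercase A/C/G/T input, and a sequence that is already in the correct reading frame; the function name `translate` is ours, chosen for illustration.

```python
from itertools import product

BASES = "TCAG"
# Standard genetic code, listed in TCAG order for each codon position;
# "*" marks a stop codon.
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {
    "".join(codon): aa
    for codon, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)
}

def translate(dna):
    """Translate a coding DNA sequence, stopping at the first stop codon."""
    protein = []
    # Step through complete codons only; a trailing partial codon is ignored.
    for i in range(0, len(dna) - 2, 3):
        aa = CODON_TABLE[dna[i:i + 3]]
        if aa == "*":
            break
        protein.append(aa)
    return "".join(protein)

print(translate("ATGGCCAAGTGA"))  # prints "MAK"
```

The output is just a string of one-letter amino acid codes, which says nothing yet about how the chain folds.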

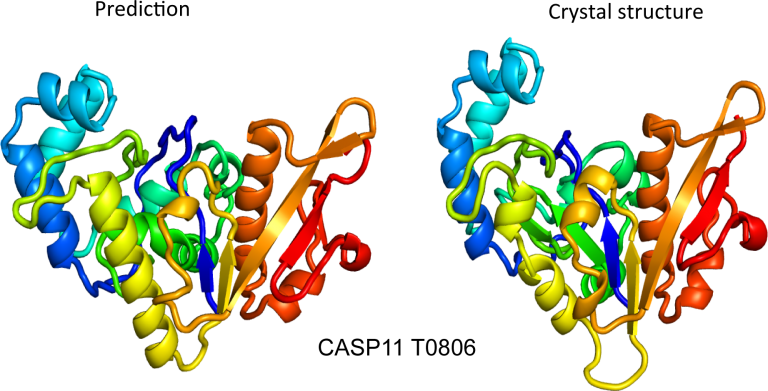

For decades, researchers have tried to develop software that can take a sequence of amino acids and accurately predict the structure it will form. Despite this being a matter of chemistry and thermodynamics, we’ve only had limited success—until last year. That’s when Google’s DeepMind AI group announced the existence of AlphaFold, which can typically predict structures with a high degree of accuracy.

At the time, DeepMind said it would give everyone the details on its breakthrough in a future peer-reviewed paper, which it finally released yesterday. In the meantime, some academic researchers got tired of waiting, took some of DeepMind’s insights, and built their own software. The paper describing that effort was also released yesterday.

The dirt on AlphaFold

DeepMind already described the basic structure of AlphaFold, but the new paper provides much more detail. AlphaFold’s structure involves two different algorithms that communicate back and forth regarding their analyses, allowing each to refine its output.

One of these algorithms looks for protein sequences that are evolutionary relatives of the one at issue, and it figures out how their sequences align, adjusting for small changes or even insertions and deletions. Even if we don’t know the structure of any of these relatives, they can still provide important constraints, telling us things like whether certain parts of the protein are always charged.
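As a rough illustration of the kind of constraint an alignment can provide, the sketch below scans an already-aligned set of sequences for columns where every relative has a charged amino acid. It is not AlphaFold's method; the toy sequences are invented, the function name is hypothetical, and treating only D, E, K, and R as charged is an assumption.

```python
CHARGED = set("DEKR")  # Asp, Glu, Lys, Arg; histidine is left out here (an assumption)

def always_charged_columns(alignment):
    """Return indices of alignment columns where every sequence has a charged residue."""
    if not alignment:
        return []
    width = len(alignment[0])
    if any(len(row) != width for row in alignment):
        raise ValueError("all aligned sequences must have the same length")
    return [
        col for col in range(width)
        if all(row[col] in CHARGED for row in alignment)
    ]

# Invented toy alignment; "-" marks a gap introduced by an insertion or deletion.
toy_alignment = [
    "MKT-DE",
    "MRTADE",
    "MKS-DD",
]
print(always_charged_columns(toy_alignment))  # prints [1, 4, 5]
```

Column 1 switches between K and R, yet it still counts, because the property being conserved is the charge rather than the exact amino acid.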

The AlphaFold team says that this portion of things needs about 30 related proteins to function effectively. It typically comes up with a basic alignment quickly, then refines it. These sorts of refinements can involve shifting gaps around in order to place key amino acids in the right place.

The second algorithm, which runs in parallel, splits the sequence into smaller chunks and attempts to solve the structure of each of these while ensuring the structure of each chunk is compatible with the larger structure. This is why aligning the protein and its relatives is essential; if key amino acids end up in the wrong chunk, then getting the structure right is going to be a real challenge. So, the two algorithms communicate, allowing proposed structures to feed back to the alignment.
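The article does not say how the chunks are chosen, so the following is only a generic sketch of one way to cut a sequence into pieces: fixed-size windows that overlap, so neighboring chunks share some residues. The window size, the overlap, and the function name are all assumptions made for illustration.

```python
def split_into_chunks(sequence, size, overlap):
    """Split a sequence into windows of `size` residues that overlap by `overlap`.

    Returns (start_index, chunk) pairs so each chunk can be placed back
    into the full-length sequence. The last chunk may be shorter than `size`.
    """
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    step = size - overlap
    chunks = []
    start = 0
    while True:
        chunks.append((start, sequence[start:start + size]))
        if start + size >= len(sequence):
            break
        start += step
    return chunks

for start, chunk in split_into_chunks("ABCDEFGHIJ", size=4, overlap=2):
    print(start, chunk)
```

Keeping the start index alongside each chunk matters for the reason given above: a residue's position in the full sequence is what ties it to the right alignment column.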

The structural prediction is a more difficult process, and the algorithm’s original ideas often undergo more significant changes before the algorithm settles into refining the final structure.

Perhaps the most interesting new detail in the paper comes from tests in which DeepMind disables different portions of the analysis algorithms. These tests show that, of the nine different functions the team defines, all seem to contribute at least a little to the final accuracy, but only one has a dramatic effect on it. That one involves identifying the points in a proposed structure that are likely to need changes and flagging them for further attention.
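This style of test is usually called an ablation study, and its logic can be sketched generically: score the full system, then score it again with one component switched off at a time. The component names and scores below are placeholders invented for illustration, not values from the paper, and `evaluate` stands in for whatever accuracy measurement a real study would run.

```python
def ablation_study(components, evaluate):
    """Measure how much the score drops when each component is disabled.

    `evaluate` takes the set of enabled components and returns a score.
    """
    all_enabled = frozenset(components)
    baseline = evaluate(all_enabled)
    drops = {}
    for name in components:
        drops[name] = baseline - evaluate(all_enabled - {name})
    return baseline, drops

# Placeholder scoring: each made-up component adds a fixed number of points.
toy_weights = {"component_a": 1, "component_b": 2, "component_c": 10}

def toy_evaluate(enabled):
    return sum(toy_weights[name] for name in enabled)

baseline, drops = ablation_study(list(toy_weights), toy_evaluate)
print(baseline)  # prints 13
print(drops)     # prints {'component_a': 1, 'component_b': 2, 'component_c': 10}
```

In this toy setup every component helps a little and one dominates, which mirrors the shape of the result described above without reproducing any of its numbers.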

The competition

In an announcement timed for the paper’s release, DeepMind CEO Demis Hassabis said, “We pledged to share our methods and provide broad, free access to the scientific community. Today, we take the first step towards delivering on that commitment by sharing AlphaFold’s open-source code and publishing the system’s full methodology.”

But Google had already described the system’s basic structure, which caused some researchers in the academic world to ponder whether they could adapt their existing tools to a system structured more like DeepMind’s. And, with a seven-month lag, the researchers had plenty of time to act on that idea.

The researchers used DeepMind’s initial description to identify five features of AlphaFold that they felt differed from most existing methods. So, they attempted to implement different combinations of these features and figure out which ones resulted in improvements over current methods.

The simplest thing to get working was having two parallel algorithms: one dedicated to aligning sequences, the other performing structural predictions. But the team ended up splitting the structural portion of things into two distinct functions. One of those functions simply estimates a two-dimensional map of the distances between individual parts of the protein, and the other handles their actual locations in three-dimensional space. All three functions exchange information, with each providing the others hints on what aspects of its task might need further refinement.
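The relationship between the two structural representations can be shown with a small sketch: given 3D positions, the pairwise distances form a square, two-dimensional table. This is only an illustration of the data structures, not RoseTTAFold's code; the coordinates are invented and the function name is hypothetical.

```python
import math

def distance_map(coords):
    """Return an N x N table of distances between every pair of 3D points."""
    return [[math.dist(a, b) for b in coords] for a in coords]

# Invented positions for three parts of a protein, in arbitrary units.
toy_coords = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (0.0, 0.0, 5.0)]

for row in distance_map(toy_coords):
    print([round(d, 2) for d in row])
```

The table is symmetric with zeros on the diagonal, and it stays the same if the whole structure is rotated or shifted, so it describes the shape without fixing where the protein sits in space.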

The problem with adding a third pipeline is that it significantly boosts the hardware requirements, and academics in general don’t have access to the same sorts of computing assets that DeepMind does. So, while the system, called RoseTTAFold, didn’t perform as well as AlphaFold in terms of the accuracy of its predictions, it was better than any previous systems that the team could test. But, given the hardware it was run on, it was also relatively fast, taking about 10 minutes when run on a protein that’s 400 amino acids long.

Like AlphaFold, RoseTTAFold splits up the protein into smaller chunks and solves those individually before trying to put them together into a complete structure. In this case, the research team realized that this might have an additional application. A lot of proteins form extensive interactions with other proteins in order to function; hemoglobin, for example, exists as a complex of four proteins. If the system works as it should, feeding it two different proteins should allow it to figure out both of their structures and where they interact with each other. Tests showed that this actually works.

Healthy competition

Both of these papers seem to describe positive developments. To start with, the DeepMind team deserves full credit for the insights it had into structuring its system in the first place. Clearly, setting things up as parallel processes that communicate with each other has produced a major leap in our ability to estimate protein structures. The academic team, rather than simply trying to reproduce what DeepMind did, just adopted some of the major insights and took them in new directions.

Right now, the two systems clearly have performance differences, both in terms of the accuracy of their final output and in terms of the time and compute resources that need to be dedicated to it. But with both teams seemingly committed to openness, there’s a good chance that the best features of each can be adopted by the other.

Whatever the outcome, we’re clearly in a new place compared to where we were just a couple of years ago. People have been trying to solve protein-structure prediction for decades, and our inability to do so has become more problematic at a time when genomes are providing us with vast quantities of protein sequences that we have little idea how to interpret. The demand for time on these systems is likely to be intense, because a very large portion of the biomedical research community stands to benefit from the software.

Science, 2021. DOI: 10.1126/science.abj8754

Nature, 2021. DOI: 10.1038/s41586-021-03819-2