Hard-to-cancel culture

New laws bar efforts to trick consumers into handing over data or money.

Tom Simonite, wired.com


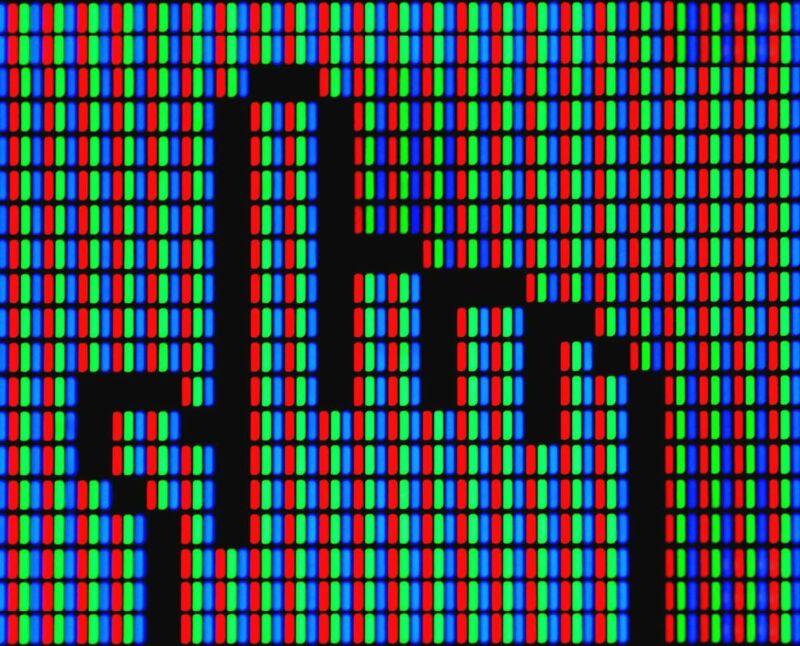

In 2010, British designer Harry Brignull coined a handy new term for an everyday annoyance: dark patterns, meaning digital interfaces that subtly manipulate people. It became a term of art used by privacy campaigners and researchers. Now, more than a decade later, the coinage is gaining new, legal, heft.

Dark patterns come in many forms and can trick a person out of time or money or into forfeiting personal data. A common example is the digital obstacle course that springs up when you try to nix an online account or subscription, such as for streaming TV, asking you repeatedly if you really want to cancel. A 2019 Princeton survey of dark patterns in e-commerce listed 15 types of dark patterns, including hurdles to canceling subscriptions and countdown timers to rush consumers into hasty decisions.

A new California law approved by voters in November will outlaw some dark patterns that steer people into giving companies more data than they intended. The California Privacy Rights Act is intended to strengthen the state’s landmark privacy law. The section of the new law defining user consent says that “agreement obtained through use of dark patterns does not constitute consent.”

That’s the first time the term dark patterns has appeared in US law but likely not the last, says Jennifer King, a privacy specialist at the Stanford Institute for Human-Centered Artificial Intelligence. “It’s probably going to proliferate,” she says.

State senators in Washington this month introduced their own state privacy bill—a third attempt at passing a law that, like California’s, is motivated in part by the lack of broad federal privacy rules. This year’s bill copies verbatim California’s prohibition on using dark patterns to obtain consent. A competing bill unveiled Thursday and backed by the ACLU of Washington does not include the term.

King says other states, and perhaps federal lawmakers emboldened by Democrats gaining control of the US Senate, may follow suit. A bipartisan duo of senators took aim at dark patterns with 2019's failed Deceptive Experiences to Online Users Reduction Act, although the bill's text didn't use the term.

California’s first-in-the-nation status on regulating dark patterns comes with a caveat. It’s not clear exactly which dark patterns will become illegal when the new law takes full effect in 2023; the rules are to be determined by a new California Privacy Protection Agency that won’t start operating until later this year. The law defines a dark pattern as “a user interface designed or manipulated with the substantial effect of subverting or impairing user autonomy, decision-making, or choice, as further defined by regulation.”

James Snell, a partner specializing in privacy at the law firm Perkins Coie in Palo Alto, California, says it's so far unclear whether the privacy agency will craft specific rules, or what they would be. "It's a little unsettling for businesses trying to comply with the new law," he says.

Snell says clear boundaries on what's acceptable, such as restrictions on how a company obtains consent to use personal data, could benefit both consumers and companies. The California statute may also prove more notable as a case of the law catching up with privacy lingo than as a dramatic extension of regulatory power. "It's a cool name but really just means you're being untruthful or misleading, and there are a host of laws and common law that already deal with that," Snell says.

Alastair Mactaggart, the San Francisco real estate developer who propelled the CPRA and also helped create the law it revised, says dark patterns were added in an effort to give people more control of their privacy. “The playing field is not remotely level, because you have the smartest minds on the planet trying to make that as difficult as possible for you,” he says. Mactaggart believes that the rules on dark patterns should eventually empower regulators to act against tricky behavior that now escapes censure, such as making it easy to allow tracking on the Web but extremely difficult to use the opt-out that California law requires.

King, of Stanford, says that’s plausible. Enforcement by US privacy regulators is generally focused on cases of outright deception. California’s dark patterns rules could allow action against plainly harmful tricks that fall short of that. “Deception is about planting a false belief, but dark patterns are more often a company leading you along a prespecified path, like coercion,” she says.

This story originally appeared on wired.com.